Ask Optimal Questions: Aligning Large Language Models with Retriever's Preference in Conversational Search

Chanwoong Yoon*, Gangwoo Kim*, Byeongguk Jeon, Sungdong Kim, Yohan Jo, Jaewoo Kang

NAACL 2025 Findings.

Paper / Code

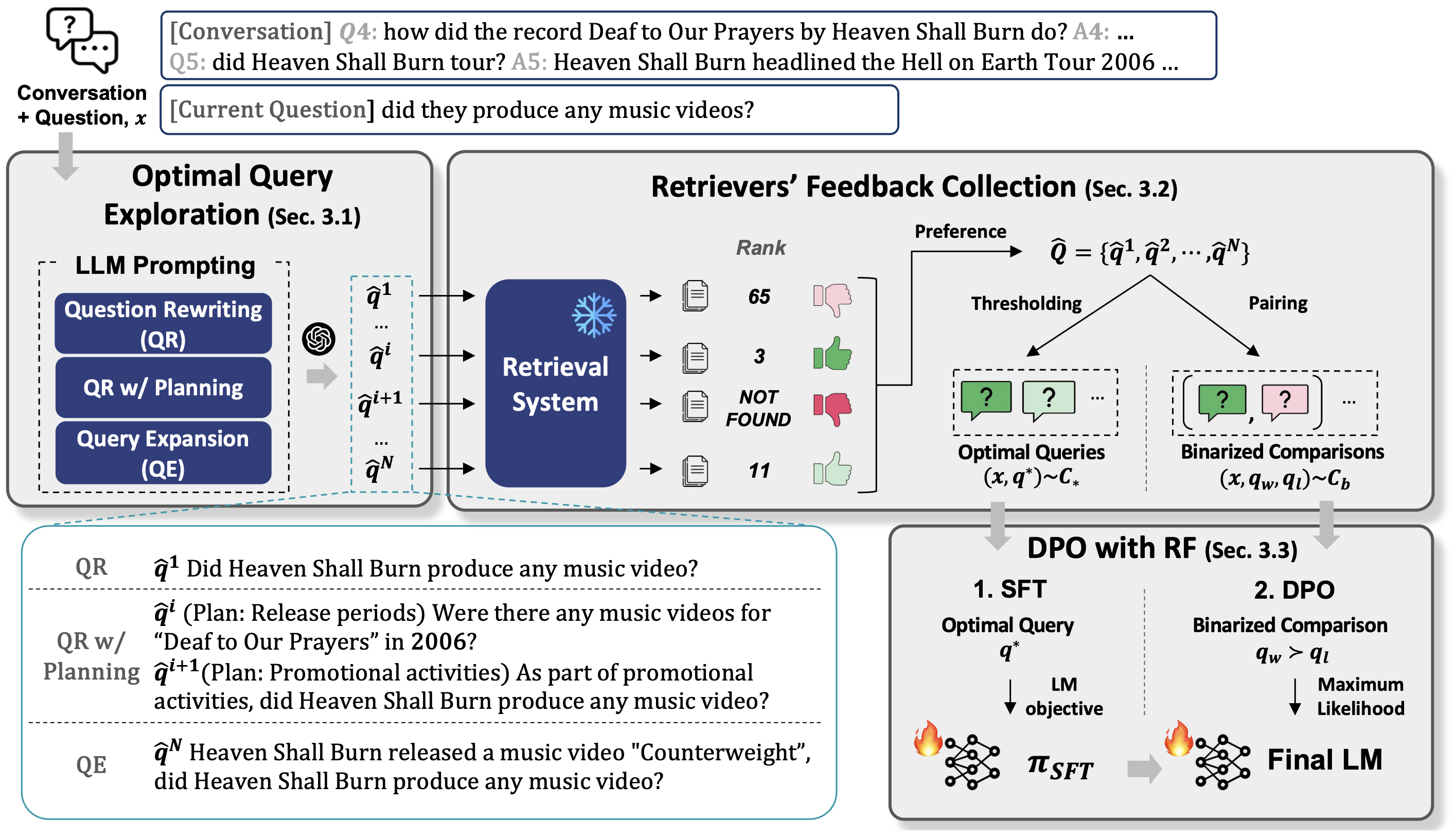

We present Retriever’s Preference Optimization (RetPO), which optimizes a language model (LM) to reformulate search queries in line with the preferences of the target retrieval systems.

CompAct: Compressing Retrieved Documents Actively for Question Answering

Chanwoong Yoon, Taewhoo Lee, Hyeon Hwang, Minbyul Jeong, Jaewoo Kang

EMNLP 2024 Main.

Paper / Code

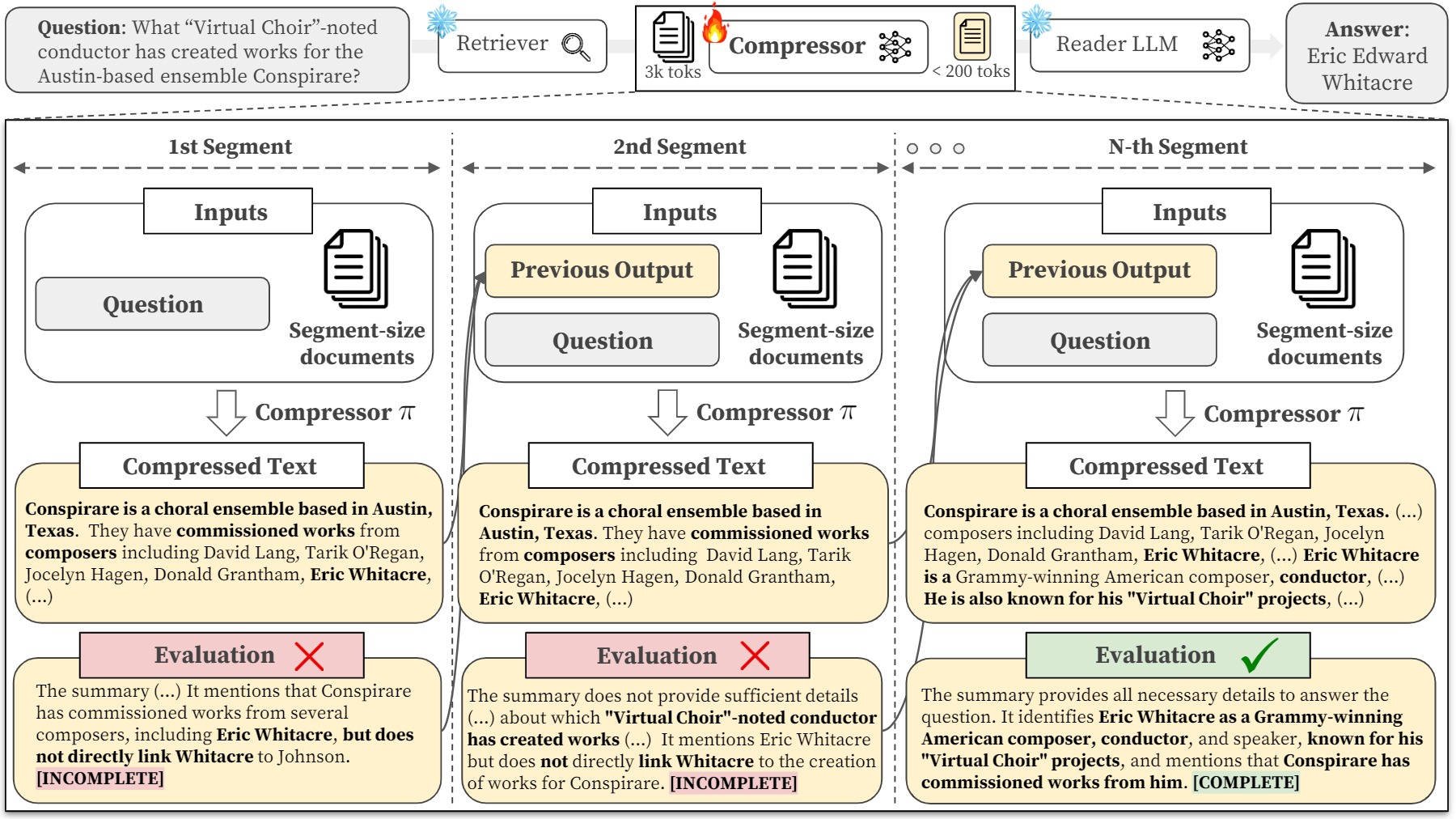

We propose CompAct, a novel framework that employs an active strategy to compress large sets of retrieved documents. CompAct dynamically preserves query-related context, focusing on integrating information across documents.

ETHIC: Evaluating Large Language Models on Long-Context Tasks with High Information Coverage

Taewhoo Lee, Chanwoong Yoon, Kyochul Jang, Donghyun Lee, Minju Song, Hyunjae Kim, Jaewoo Kang

NAACL 2025 Main.

Paper / Code

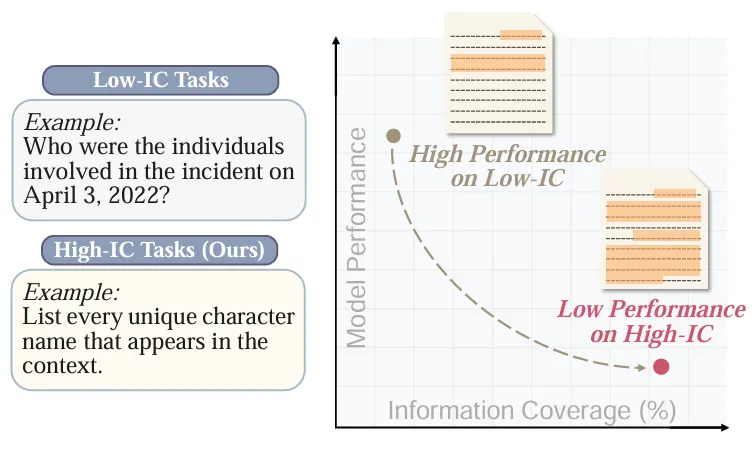

We introduce ETHIC, a new benchmark that evaluates how well large language models handle long-context tasks requiring high information coverage.

Does Time Have Its Place? Temporal Heads: Where Language Models Recall Time-specific Information

Yein Park, Chanwoong Yoon, Jungwoo Park, Minbyul Jeong, Jaewoo Kang

ACL 2025 Main.

Paper / Code

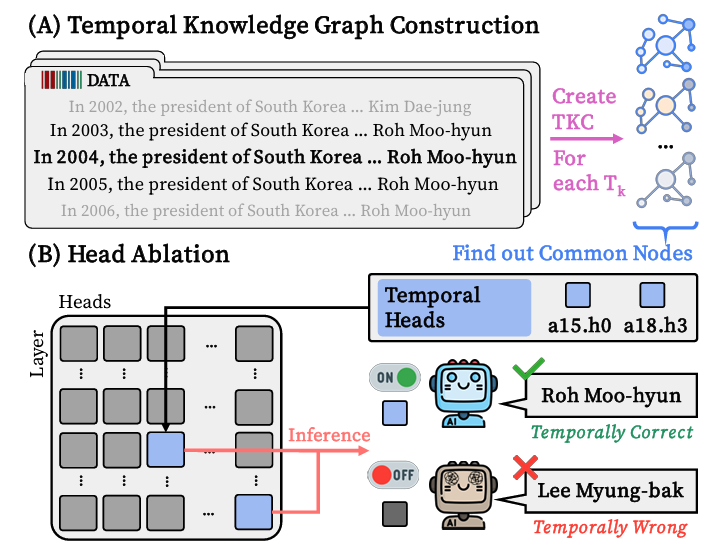

We investigate the mechanisms by which language models recall time-specific information and identify Temporal Heads, the specific attention heads responsible for temporal reasoning.

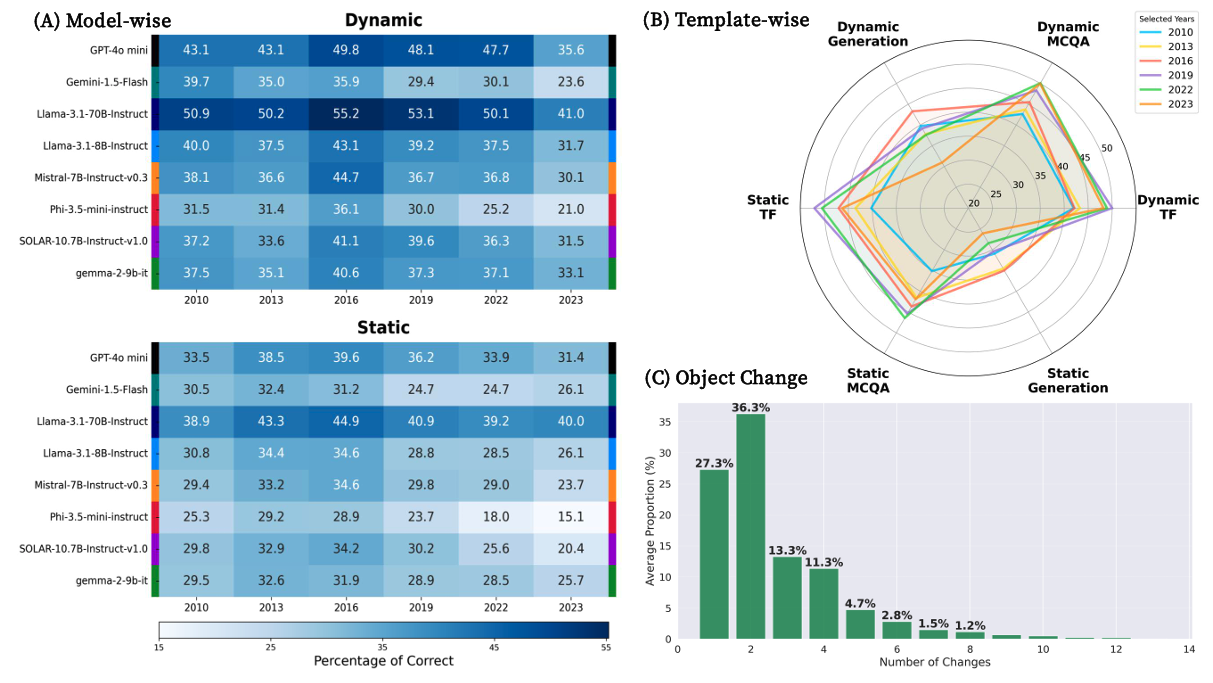

ChroKnowledge: Unveiling Chronological Knowledge of Language Models in Multiple Domains

Yein Park, Chanwoong Yoon, Jungwoo Park, Donghyeon Lee, Minbyul Jeong, Jaewoo Kang

ICLR 2025.

Paper / Code

We introduce ChroKnowledge, a comprehensive benchmark designed to evaluate the chronological knowledge of language models across multiple domains.

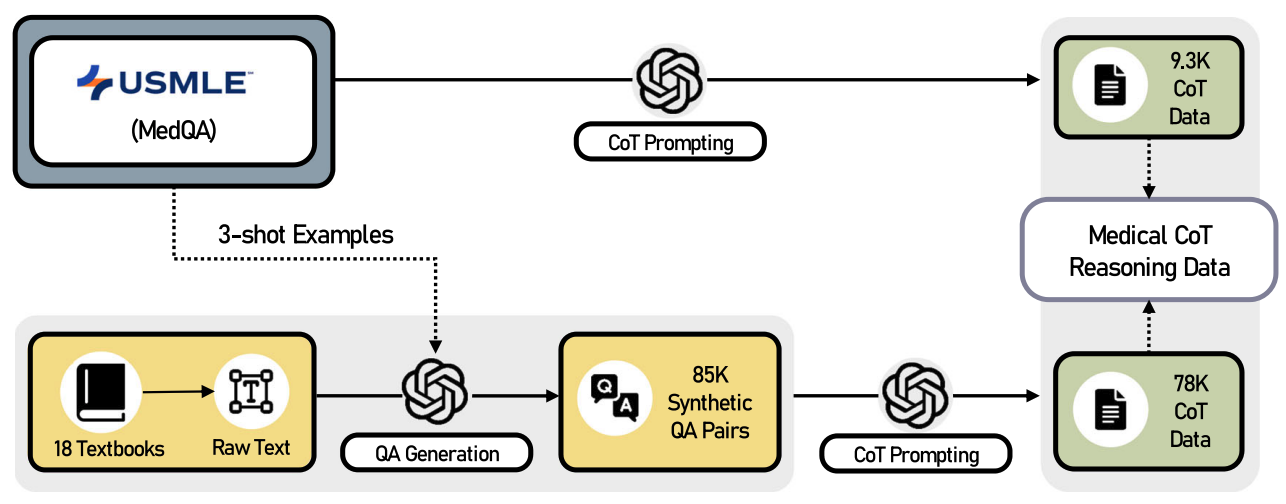

Small language models learn enhanced reasoning skills from medical textbooks

npj Digital Medicine.

Paper / Model

We released a new medical LM, Meerkat-7B, the first 7B-parameter model to pass the United States Medical Licensing Examination (USMLE). This study demonstrates that small language models can achieve enhanced medical reasoning abilities through targeted training on specialized medical textbooks.